Main researcher: Michał Bujacz, Ph.D.

The authors’ main goal was to develop a device that delivers as much useful information as possible in a simple, easily understandable way. This requires a rich input combined with computer algorithms that reduce the output data to the necessary minimum.

The electronic travel aid (ETA) proposed by the authors uses stereovision input and spatial sound output. Stereovision was chosen as the scene acquisition method because it is inexpensive and passive, and because of the authors’ image processing experience.

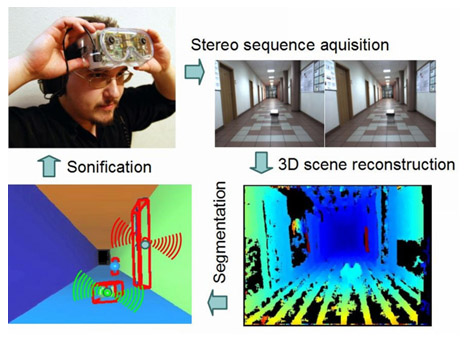

The reconstructed 3D scene undergoes segmentation in order to prepare a parametric scene model for sonification. The concept is illustrated in Figure 1. The segmentation algorithm consists of two main steps: first, all major planes are detected and subtracted from the scene; second, the remaining scene elements are approximated by cuboid objects. Plane detection is an iterative process in which surfaces defined by triangles formed by points in the depth map are merged with similar neighbors. Once no remaining triangles meet the similarity threshold, the leftover points are grouped into clouds, and the centers of mass and dimensions of the objects are calculated. The default model used as input to the sonification algorithm was limited to four surfaces, described by plane equations, and four objects, described by position, size and rotation parameters.
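The last step above, turning a point cloud into a cuboid description, can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes an axis-aligned box and ignores the rotation parameter, and the names `Cuboid` and `approximate_cuboid` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Cuboid:
    center: Point  # center of mass of the point cloud
    size: Point    # extent of the cloud along x, y and z


def approximate_cuboid(cloud: List[Point]) -> Cuboid:
    """Approximate a point cloud by an axis-aligned box (assumption: no rotation)."""
    if not cloud:
        raise ValueError("empty point cloud")
    n = len(cloud)
    # Center of mass: the mean of each coordinate.
    center = tuple(sum(p[i] for p in cloud) / n for i in range(3))
    # Dimensions: the span between the extreme points on each axis.
    size = tuple(
        max(p[i] for p in cloud) - min(p[i] for p in cloud) for i in range(3)
    )
    return Cuboid(center, size)
```

A rotated box would need an extra orientation estimate (for example from the principal axes of the cloud), which this sketch leaves out.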

Simulation trials and surveys with 10 blind testers served to shape the final version of the sonification algorithm. The main concept was to translate the parameters of segmented scene elements into parameters of sounds, in a way that was simple, instinctively understandable and pleasant to the ear. The chosen assignment was to represent the distance to an object with pitch and amplitude of the assigned sound. Sound duration was made proportional to the size of an object. Using personalized spatial audio (the measurement equipment is shown in Fig. 3), the virtual sound sources are localized to originate from the directions of the scene elements. Different categories of elements can be assigned different sound/instrument types. The sounds are played back in order of proximity to the observer.
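The parameter mapping described above can be sketched in a few lines. The text does not state the direction or the numeric ranges of the mapping, so this sketch assumes that nearer objects sound higher and louder, and every constant (`max_range_m`, the two pitch limits, `seconds_per_meter`) is a hypothetical placeholder.

```python
from dataclasses import dataclass


@dataclass
class SoundParams:
    pitch_hz: float
    amplitude: float   # normalized to the range 0..1
    duration_s: float


def sonify_object(distance_m: float, size_m: float,
                  max_range_m: float = 5.0,
                  pitch_near_hz: float = 880.0,
                  pitch_far_hz: float = 220.0,
                  seconds_per_meter: float = 0.2) -> SoundParams:
    """Map object distance to pitch and amplitude, and object size to duration."""
    # Clamp the distance to the assumed working range.
    d = min(max(distance_m, 0.0), max_range_m)
    nearness = 1.0 - d / max_range_m  # 1.0 at the observer, 0.0 at max range
    # Assumption: pitch and amplitude both grow linearly as the object gets closer.
    pitch = pitch_far_hz + nearness * (pitch_near_hz - pitch_far_hz)
    amplitude = nearness
    # Duration is proportional to object size, as stated in the text.
    duration = seconds_per_meter * size_m
    return SoundParams(pitch, amplitude, duration)
```

With these placeholder values, an object 1 m wide at 2.5 m would be rendered at 550 Hz, half amplitude, for 0.2 s.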

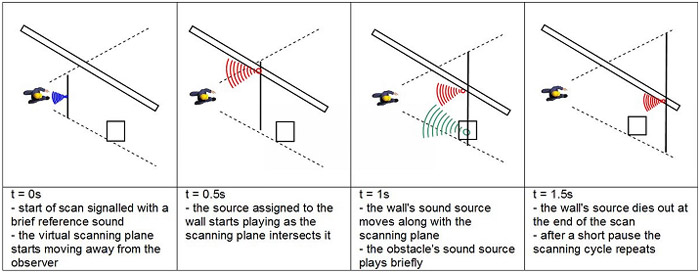

The sonification process, dubbed “depth-scanning”, can be pictured as a virtual scanning plane, as shown in Figure 2. As the plane moves away from the observer, it triggers playback of the virtual sources assigned to the scene elements it reaches. When sonifying walls, the sound continues as long as the plane intersects the wall, and the virtual source moves along its surface at eye level. The default scanning period has been set to 2 s, with 0.5 s of silence between consecutive scans.
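The timing of a depth scan can be illustrated with a short sketch. The 2 s scan and 0.5 s silence come from the text; the linear sweep speed and the 5 m maximum range are assumptions made here for illustration, and `onset_time` is a hypothetical helper.

```python
SCAN_PERIOD_S = 2.0  # duration of one sweep of the scanning plane (from the text)
SILENCE_S = 0.5      # pause between consecutive scans (from the text)


def onset_time(distance_m: float, scan_index: int = 0,
               max_range_m: float = 5.0) -> float:
    """Return the playback time (in seconds) at which the plane reaches an object.

    Assumption: the plane moves at constant speed from the observer
    to max_range_m over one scan period.
    """
    d = min(max(distance_m, 0.0), max_range_m)
    # Each cycle consists of one sweep followed by the silent gap.
    cycle_start = scan_index * (SCAN_PERIOD_S + SILENCE_S)
    return cycle_start + SCAN_PERIOD_S * d / max_range_m
```

Under these assumptions, an object at 2.5 m is heard 1.0 s into the first scan, and the second scan starts at 2.5 s.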

The sound selection was influenced by the concepts of stream segregation and integration as proposed by Bregman, as the authors’ intent was to make individual virtual sources easily segregated from the entirety of the device’s stream. The instruments were chosen to differ spectrally in both pitch and tone, and a minimum delay between the onsets of two sounds was set to 0.2 s. The final selection of sounds was also influenced by preferences of surveyed blind testers during trials presented in Section 5.
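One way to enforce the minimum delay between onsets is sketched below. The text gives only the 0.2 s limit; pushing the later sound back, rather than dropping or merging it, is an assumption of this sketch, and `enforce_min_gap` is a hypothetical name.

```python
MIN_ONSET_GAP_S = 0.2  # minimum delay between two sound onsets (from the text)


def enforce_min_gap(onsets, min_gap=MIN_ONSET_GAP_S):
    """Return sorted onset times with each onset at least min_gap after the previous.

    Assumption: a sound that would start too early is delayed, not discarded.
    """
    spaced = []
    for t in sorted(onsets):
        if spaced and t - spaced[-1] < min_gap:
            # Delay this onset so it keeps the minimum distance to the previous one.
            t = spaced[-1] + min_gap
        spaced.append(t)
    return spaced
```

Two objects at nearly the same depth would otherwise start almost simultaneously; spacing their onsets keeps them audible as separate sources.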

To allow efficient modular design of the ETA prototype software, the sonification algorithm was ported to the Microsoft DirectShow (DS) multimedia framework. A modular architecture is useful when a group of programmers separately develops different aspects of a project, as was the case here. DirectShow’s advantage is its multimedia-oriented design, which allows fast exchange of buffers of various data types, in this case stereovision images, depth maps and parametric scene descriptions.

The most recent step in the research was the design and construction of a portable ETA prototype. The purpose of the prototype was to provide real-time conversion of video input into a sonified output. The video source was the commercially available Point Grey Bumblebee2 FireWire stereovision module in a custom head-mount. A mid-range laptop PC performed the necessary data processing and could be carried in a special backpack (however, it was more comfortable to perform most tests on a cable tether).

The pilot study tested obstacle avoidance and orientation in real-world scenes. For the safety of the trial participants, the tests were conducted in a controlled environment. Colored cardboard boxes were used as obstacles, and their texturing guaranteed very accurate stereovision reconstruction and smooth operation of the segmentation algorithms.

The trial participants were 5 blind and 5 blindfolded volunteers for whom personalized HRTFs had previously been collected. Each group consisted of three men and two women, aged 28-50. The volunteers tested the efficiency of obstacle detection, avoidance and orientation in a safe environment. Trial times, collisions and scene reconstructions served as objective results. Subjective feedback was also collected through surveys during and after the trials. Volunteers were given only 5 minutes of instruction and were allowed a short familiarization with the prototype. The tests were designed to grow in complexity and thus provide training as they progressed. First the users learned to locate single obstacles, then two at once, next to navigate between two obstacles to reach a sound source, and finally to navigate between four obstacles in a corridor. Photos from the trial are shown in Fig. 4 and videos are available in the media section.

Current research focuses on construction of a more portable prototype that could be worn in the form of large sunglasses. Trials in outdoor environments are foreseen for the summer of 2012.